© 2020 - DashTech. All Right Reserved.

Internet

How To Install Game Content Dlc Updates In 2024

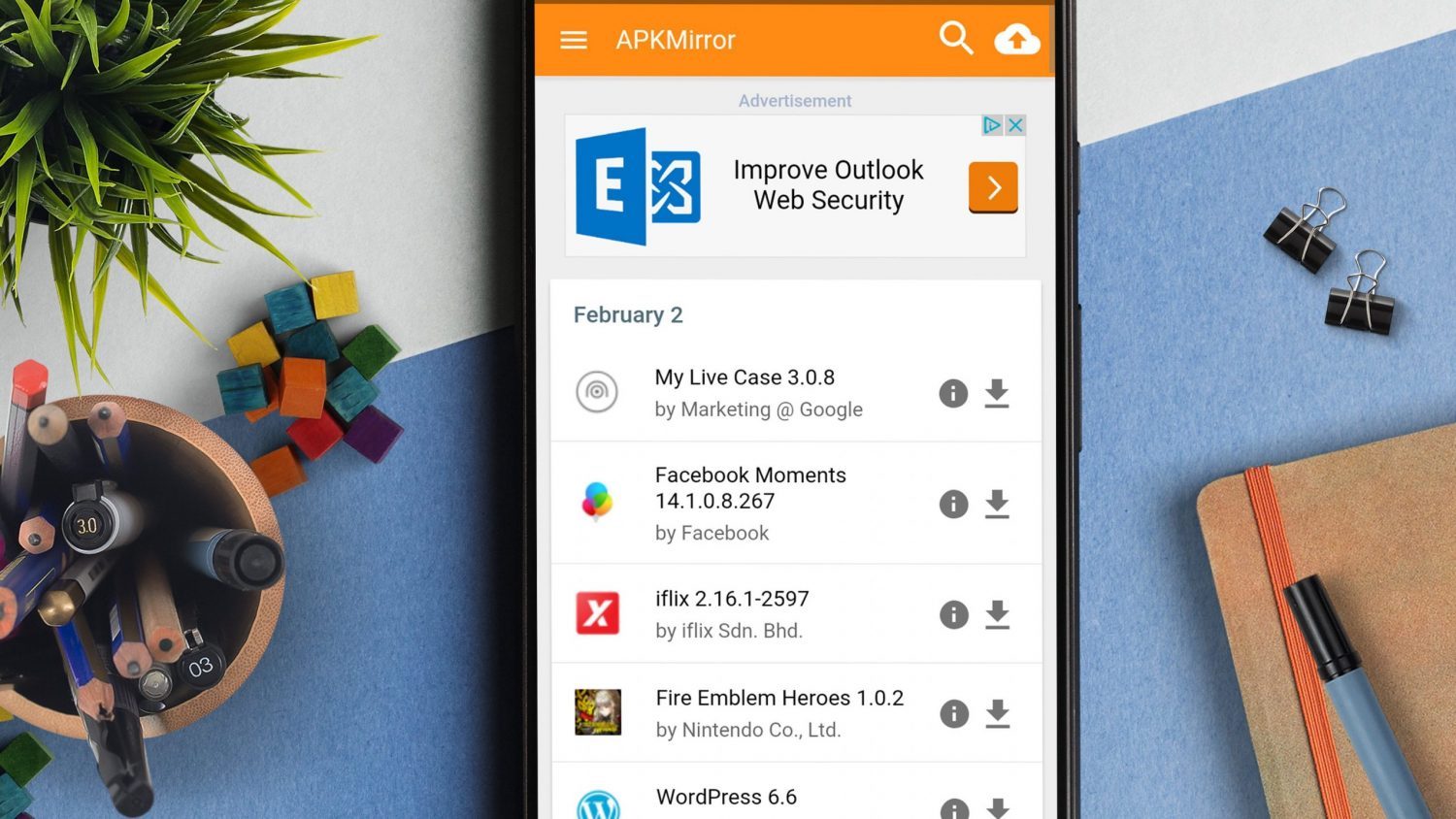

How To Install Game Content Dlc Update will be described in this article. If you have recently installed YuZu Android...

Read moreGadgets

Entertainment

Northern Tool: Your Ultimate Destination for Tools and Equipment

Are you in need of reliable tools and equipment for your projects? Look no further than Northern Tool, the leading...

Salesloft: Revolutionizing Sales Engagement Platforms

Salesloft is a groundbreaking platform that is transforming the way sales teams engage with prospects and customers. It offers a...

Best Online Fax Services For Your Business in 2024

In some cases, you need online fax services. The chances are, nevertheless, that you do not have a fax machine....

Phones

MORE NEWS

Top 15 Best Chatville Alternatives In 2024

This article shows chatville alternatives. It is a totally free cam group where great deals of genuine amateur users are...

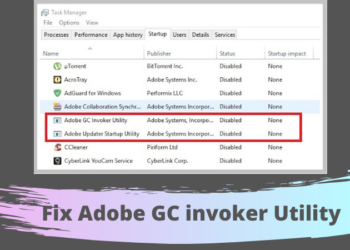

What is Adobe Gc Invoker Utility?

This article will show details regarding gc invoker utility. When you deal with an issue of a program freeze while...

Top 15 Best USE Browser Alternatives in 2024

Best and popular USE browser alternative will be discussed in this article. USE Browser is a quick web browser for...

12 Best Limetorrents Alternatives Working When TPB Is Down [2024]

This article contain information about limetorrent and pirate proxy 2020, limetorrent, utorrent. The preliminary gush web site on our listing...

Top 11 Best eWritingService Alternatives in 2024

Best and official eWriting service alternatives will be explained in this article. No matter what your place is, you can...

25 Best Rarbg Alternatives Working When TPB Is Down

This article contain information about Rarbg and pirate proxy 2020, qbittorrent, utorrent. The preliminary gush web site on our listing...

Top 15 Best TreeSize Alternatives in 2024

Best and most efficient Treesize alternatives will be described in this article. TreeSize is a faster disc space management platform...